In a competitive digital economy, businesses are increasingly turning to a powerful, if intangible, asset to fuel their growth: data. For organizations that want to draw reliable insights from that data, a big data strategy is no longer a luxury but a necessity. This strategic plan provides a framework for how a company will collect, store, manage, analyze, and ultimately monetize the information flowing through its operations. By implementing it systematically, businesses in any industry can move beyond intuition to make better-informed decisions, optimize operations, and create products and services that give them a competitive edge.

Understanding the ‘Why’: Defining Your Business Objectives

Before a single server is provisioned or a line of code is written, the most critical step in any big data initiative is to define its purpose. A strategy built on technology for technology’s sake is unlikely to deliver value. Instead, the foundation must be a clear understanding of the business problems you aim to solve or the opportunities you wish to seize.

Begin by asking fundamental questions. Are you trying to reduce customer churn by identifying at-risk clients? Do you want to optimize your supply chain by predicting demand fluctuations? Is the goal to increase marketing ROI by delivering hyper-personalized campaigns? Each of these goals provides a clear direction for your data efforts.

It is crucial to involve stakeholders from every corner of the organization in this initial phase. Executives in the C-suite can align the strategy with overall corporate goals, while leaders in marketing, sales, finance, and operations can identify specific pain points and potential use cases within their domains. Tying each initiative to measurable Key Performance Indicators (KPIs)—such as a 15% reduction in operational costs or a 10% increase in customer lifetime value—makes the strategy tangible and its success demonstrable.
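
Checking a KPI target of this kind comes down to simple arithmetic. The sketch below is illustrative only: the function names and the baseline and current figures are hypothetical and not drawn from any real company.

```python
def percent_change(baseline: float, current: float) -> float:
    """Return the relative change from baseline to current, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100.0


def kpi_met(baseline: float, current: float, target_pct: float) -> bool:
    """Check whether the observed change reaches the target.

    A negative target (e.g. -15) means a reduction is wanted;
    a positive target (e.g. +10) means an increase is wanted.
    """
    change = percent_change(baseline, current)
    return change <= target_pct if target_pct < 0 else change >= target_pct


# Hypothetical figures: operating costs moved from 2.0M to 1.68M.
cost_change = percent_change(2_000_000, 1_680_000)
# Hypothetical figures: customer lifetime value moved from 400 to 432.
clv_change = percent_change(400, 432)

print(f"Operational cost change: {cost_change:.1f}%")
print(f"Cost KPI (-15%) met: {kpi_met(2_000_000, 1_680_000, -15)}")
print(f"CLV change: {clv_change:.1f}%")
print(f"CLV KPI (+10%) met: {kpi_met(400, 432, 10)}")
```

Fixing the baseline and the target before the initiative starts is what makes the later comparison meaningful.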

Step 1: Identify and Prioritize Data Sources

With clear objectives in place, the next step is to identify the data required to achieve them. Big data is often characterized by the “Three V’s”: Volume (the sheer amount of data), Velocity (the speed at which it is generated), and Variety (the different formats it comes in). Later formulations add further V’s, such as Veracity (how accurate and trustworthy the data is) and Value (how useful it ultimately is), both of which are central to a successful strategy.

Data sources can be broadly categorized as internal or external, and understanding both is key to building a complete picture.

Internal Data Sources

This is the data your organization already generates and controls. It often includes structured data from Customer Relationship Management (CRM) and Enterprise Resource Planning (ERP) systems, which contain valuable transactional and customer information. It also encompasses machine-generated data, such as server logs, clickstream data from your website, and readings from Internet of Things (IoT) sensors on a factory floor. Finally, there is a wealth of unstructured, human-generated data in emails, customer support tickets, and internal documents that can be mined for sentiment and insights.

External Data Sources

Looking outside your organization can enrich your internal datasets and provide crucial context. This includes publicly available information like social media feeds, which can reveal brand sentiment and emerging trends. Government databases offer demographic and economic data, while third-party data providers can supply market research, competitor intelligence, and weather data that might impact your sales or logistics. The key is to select external sources that directly complement your internal data and support your business objectives.

Step 2: Choosing the Right Technology Stack

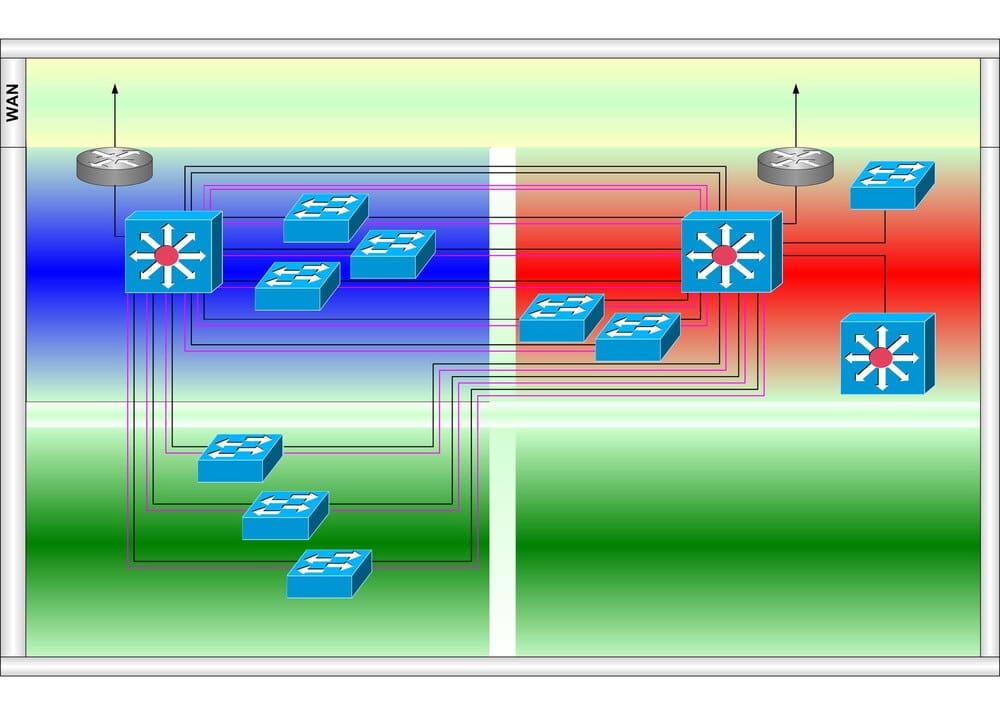

Once you know what data you need, you must decide how to handle it. Your technology stack is the collection of tools and platforms that will form the backbone of your big data infrastructure. This choice should be driven by your specific use cases, budget, technical expertise, and scalability needs.

Data Storage and Management

A foundational decision is where to store your data. A data warehouse is a traditional repository for structured, filtered data that has already been processed for a specific purpose. In contrast, a data lake is a vast pool of raw data in its native format, offering more flexibility for future analysis. Many modern architectures use both, employing the data lake for storage and exploration and the warehouse for structured reporting.
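
The difference between the two can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production design: a list of JSON strings stands in for the data lake, an in-memory SQLite table stands in for the warehouse, and the event fields are hypothetical.

```python
import json
import sqlite3

# "Data lake" stand-in: raw events kept in their native format (JSON lines),
# including record types and fields nobody has a use for yet.
raw_events = [
    '{"type": "order", "order_id": 1, "customer": "a17", "amount": 120.5, "ua": "Mozilla/5.0"}',
    '{"type": "page_view", "customer": "a17", "url": "/pricing"}',
    '{"type": "order", "order_id": 2, "customer": "b42", "amount": 80.0}',
    '{"type": "order", "order_id": 3, "customer": "a17", "amount": 40.0}',
]

# "Data warehouse" stand-in: a typed table designed for one reporting purpose.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)

# Load step: parse raw records, keep only orders, keep only the needed columns.
for line in raw_events:
    event = json.loads(line)
    if event.get("type") == "order":
        warehouse.execute(
            "INSERT INTO orders VALUES (?, ?, ?)",
            (event["order_id"], event["customer"], event["amount"]),
        )
warehouse.commit()

# Structured reporting runs against the warehouse table, not the raw events.
revenue_by_customer = warehouse.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
print(revenue_by_customer)
```

The raw list still holds the page view and the extra `ua` field, so a later analysis can use them even though the reporting table never needed them.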

Cloud platforms such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform have become a common choice for big data storage. Their object storage services, including Amazon S3, Azure Blob Storage, and Google Cloud Storage, scale with data volume and can be cost-effective because they are billed by usage.

Data Processing

Getting data from its source to a state where it can be analyzed requires processing. Batch processing, for which frameworks like Apache Spark are widely used, is ideal for handling large volumes of data where real-time results are not critical, such as generating monthly sales reports. Use cases that demand immediate insights, like fraud detection or real-time personalization, call for streaming technologies: an event streaming platform such as Apache Kafka to transport events as they occur, and a stream processing engine such as Apache Flink to compute on them continuously.
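
The contrast between the two processing styles can be shown without any of these frameworks. The sketch below uses plain Python only and does not use any Spark, Kafka, or Flink API; the record shape, the account names, and the alert threshold are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Transaction = Tuple[str, float]  # (account_id, amount); hypothetical record shape


def batch_totals(transactions: List[Transaction]) -> Dict[str, float]:
    """Batch style: the full dataset is available, results come at the end."""
    totals: Dict[str, float] = defaultdict(float)
    for account, amount in transactions:
        totals[account] += amount
    return dict(totals)


def stream_alerts(transactions: Iterable[Transaction], limit: float) -> Iterator[str]:
    """Stream style: handle each event on arrival and react immediately.

    Yields an alert as soon as an account's running total exceeds `limit`.
    """
    running: Dict[str, float] = defaultdict(float)
    for account, amount in transactions:
        running[account] += amount
        if running[account] > limit:
            yield f"ALERT {account}: running total {running[account]:.2f} exceeds {limit:.2f}"


events = [("acc-1", 300.0), ("acc-2", 50.0), ("acc-1", 800.0), ("acc-2", 25.0)]

# Batch: one answer after all events have been read.
print(batch_totals(events))

# Stream: the iterator stands in for an unbounded feed of incoming events.
for alert in stream_alerts(iter(events), limit=1000.0):
    print(alert)
```

The batch function cannot say anything until it has seen every record, while the streaming generator raises its alert at the third event, before the feed has ended. That latency difference is what drives the choice between the two.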

Data Analytics and Visualization

The final layer of the stack is what turns processed data into actionable insights. Business Intelligence (BI) and visualization tools like Tableau, Microsoft Power BI, and Looker are used to create dashboards and reports that make complex data understandable for business users. Increasingly, this layer also includes sophisticated Machine Learning (ML) and Artificial Intelligence (AI) platforms that can uncover predictive patterns and automate decision-making processes.

Step 3: Building the Right Team and Skills

The most advanced technology stack is useless without the right people to manage and leverage it. Building a capable data team is a non-negotiable component of a successful big data strategy. Several key roles form the core of a data-focused organization.

Key Roles in a Data Team

The Data Architect designs the overall blueprint for the data infrastructure, ensuring it is scalable, robust, and secure. Data Engineers are the builders; they construct and maintain the data pipelines that move and transform data. Data Scientists are the explorers, using advanced statistical and machine learning techniques to build predictive models and extract deep insights. Finally, Data Analysts bridge the gap between data and business, interpreting results and communicating them clearly to decision-makers.

For larger organizations, a Chief Data Officer (CDO) provides executive-level leadership, championing the data strategy and fostering a data-driven culture. Companies can build this team by hiring new talent, upskilling existing employees with relevant technical skills, or partnering with specialized external consultants to bridge immediate gaps.

Step 4: Establishing Data Governance and Security

As data becomes more central to your operations, managing it responsibly becomes paramount. Data governance and security are not afterthoughts; they are critical frameworks for ensuring your data is a reliable and protected asset.

Data Governance Framework

A governance framework establishes the rules of the road for your data. It defines clear data ownership, sets standards for data quality to ensure accuracy and consistency, and creates policies for who can access what data and under what circumstances. Strong governance builds trust in your data, which is essential for its adoption across the organization.
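
As a rough illustration, the three elements above (ownership, quality standards, access policies) can be written down as data and checked in code. This is a minimal sketch rather than a real governance tool: the `DatasetPolicy` class, the dataset names, the team names, and the roles are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class DatasetPolicy:
    owner: str                       # team accountable for the dataset
    allowed_roles: FrozenSet[str]    # roles permitted to read it
    required_fields: FrozenSet[str]  # fields every record must populate


# Hypothetical policy registry; dataset, team, and role names are made up.
POLICIES: Dict[str, DatasetPolicy] = {
    "customer_profiles": DatasetPolicy(
        owner="crm-team",
        allowed_roles=frozenset({"data_engineer", "marketing_analyst"}),
        required_fields=frozenset({"customer_id", "email"}),
    ),
    "payroll": DatasetPolicy(
        owner="finance-team",
        allowed_roles=frozenset({"finance_analyst"}),
        required_fields=frozenset({"employee_id", "salary"}),
    ),
}


def can_read(role: str, dataset: str) -> bool:
    """Deny by default: unknown datasets and unlisted roles get no access."""
    policy = POLICIES.get(dataset)
    return policy is not None and role in policy.allowed_roles


def quality_issues(dataset: str, record: dict) -> List[str]:
    """Return the required fields that are missing or empty in a record."""
    policy = POLICIES[dataset]
    return sorted(f for f in policy.required_fields if record.get(f) in (None, ""))


print(can_read("marketing_analyst", "customer_profiles"))
print(can_read("marketing_analyst", "payroll"))
print(quality_issues("customer_profiles", {"customer_id": "c-9", "email": ""}))
```

Keeping the policy as explicit data, separate from the code that enforces it, makes it easier for the named owners to review and change the rules.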

Security and Compliance

In an era of frequent data breaches and stringent regulations, security is non-negotiable. This involves implementing technical safeguards like encryption for data at rest and in transit, robust access controls, and regular security audits. Furthermore, your strategy must ensure compliance with data privacy regulations like the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), which dictate how personal data must be handled.

Step 5: Execute, Analyze, and Iterate

A big data strategy is not a static document but a living plan that must evolve. The final step is to put the plan into action, measure its impact, and continuously refine your approach.

Start with a Pilot Project

Rather than attempting a massive, organization-wide rollout from day one, begin with a manageable pilot project. Select a use case that is high-impact but relatively low-complexity. Successfully delivering on a pilot—for example, a project that improves marketing campaign targeting—can demonstrate tangible ROI and build momentum, securing the buy-in needed for more ambitious initiatives.

Measure and Communicate Results

Continuously track the KPIs you established at the very beginning. Use the data visualization tools in your tech stack to create clear, compelling dashboards that communicate your progress to stakeholders. Translating technical achievements into business impact is crucial for maintaining support and funding.

The ultimate goal is to foster a truly data-driven culture where curiosity and evidence-based decision-making are the norm, not the exception. This involves providing employees at all levels with the training and tools they need to use data confidently in their daily work. As your business evolves and new challenges arise, your big data strategy must adapt with it.

Embarking on a big data journey requires a significant investment of time, resources, and strategic focus. By following a structured path—defining clear objectives, identifying the right data, selecting appropriate technology, building a skilled team, ensuring robust governance, and iterating continuously—organizations can transform data from a simple byproduct of business into their most powerful strategic asset. This transformation is the key to unlocking new efficiencies, deeper customer understanding, and sustainable growth in the digital age.